A large discount chain compares the performance of its credit managers in Ohio and Illinois by comparing the mean dollar amounts owed by customers

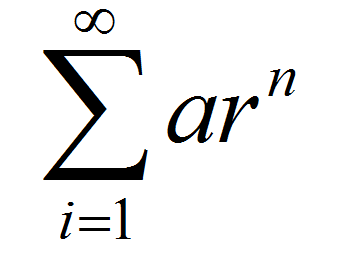

Question: A large discount chain compares the performance of its credit managers in Ohio and Illinois by comparing the mean dollar amounts owed by customers with delinquent charge accounts in these two states. Two random samples of 10 delinquent accounts each are selected, one from all delinquent accounts in Ohio and one from all delinquent accounts in Illinois. The Ohio sample gives a mean dollar amount of $524 with a standard deviation of $68. The Illinois sample yields a mean dollar amount of $473 with a standard deviation of $22.

(i) Calculate a 95% confidence interval for the difference between the mean dollar amounts owed in Ohio and Illinois.

(ii) Can we conclude, at the 5% level of significance, that there is a difference between the mean dollar amounts owed by customers with delinquent charge accounts in Ohio and Illinois?
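
A minimal sketch of both parts, assuming the two samples are independent and the populations are approximately normal. The problem does not say whether to assume equal population variances, so the code computes the pooled (equal-variance) procedure and Welch's unequal-variance procedure side by side. The helper names (`t_pdf`, `t_area_0_to`, `t_critical`, `two_sided_p`, `two_sample_t`) are hypothetical. The t critical values are found numerically (Simpson's rule plus bisection) only to keep the example within the Python standard library. A statistics package or a printed t table should give the same values.

```python
from math import exp, lgamma, log, log1p, pi, sqrt


def t_pdf(x: float, df: float) -> float:
    """Density of Student's t distribution with df degrees of freedom."""
    log_norm = lgamma((df + 1) / 2) - lgamma(df / 2) - 0.5 * log(df * pi)
    return exp(log_norm - (df + 1) / 2 * log1p(x * x / df))


def t_area_0_to(t: float, df: float, n: int = 2000) -> float:
    """P(0 <= T <= t) for t >= 0, via composite Simpson's rule (n must be even)."""
    h = t / n
    total = t_pdf(0.0, df) + t_pdf(t, df)
    for i in range(1, n):
        total += (4 if i % 2 else 2) * t_pdf(i * h, df)
    return total * h / 3


def t_critical(alpha: float, df: float) -> float:
    """Two-sided critical value t_{alpha/2, df}, found by bisection."""
    target = 0.5 - alpha / 2  # area between 0 and t_{alpha/2}
    lo, hi = 0.0, 20.0        # bracket is wide enough for the df used here
    for _ in range(50):
        mid = (lo + hi) / 2
        if t_area_0_to(mid, df) < target:
            lo = mid
        else:
            hi = mid
    return (lo + hi) / 2


def two_sided_p(t_stat: float, df: float) -> float:
    """Two-sided p-value P(|T| >= |t_stat|)."""
    return 1.0 - 2.0 * t_area_0_to(abs(t_stat), df)


def two_sample_t(x1, s1, n1, x2, s2, n2, alpha=0.05, pooled=False):
    """CI for mu1 - mu2 and two-sided test of H0: mu1 = mu2 from summary stats."""
    diff = x1 - x2
    if pooled:
        # Equal-variance assumption: pooled variance with n1 + n2 - 2 df
        sp2 = ((n1 - 1) * s1**2 + (n2 - 1) * s2**2) / (n1 + n2 - 2)
        se = sqrt(sp2 * (1 / n1 + 1 / n2))
        df = n1 + n2 - 2
    else:
        # Welch: unequal variances, Welch-Satterthwaite df
        v1, v2 = s1**2 / n1, s2**2 / n2
        se = sqrt(v1 + v2)
        df = (v1 + v2) ** 2 / (v1**2 / (n1 - 1) + v2**2 / (n2 - 1))
    t_crit = t_critical(alpha, df)
    t_stat = diff / se
    return {
        "df": df,
        "se": se,
        "ci": (diff - t_crit * se, diff + t_crit * se),
        "t": t_stat,
        "t_crit": t_crit,
        "p": two_sided_p(t_stat, df),
        "reject": abs(t_stat) > t_crit,
    }


# Ohio: mean 524, sd 68, n 10; Illinois: mean 473, sd 22, n 10
for pooled in (True, False):
    r = two_sample_t(524, 68, 10, 473, 22, 10, pooled=pooled)
    lo, hi = r["ci"]
    name = "pooled" if pooled else "Welch"
    print(f"{name}: df={r['df']:.2f}, 95% CI=({lo:.2f}, {hi:.2f}), "
          f"t={r['t']:.3f}, p={r['p']:.4f}, reject H0: {r['reject']}")
```

Because both samples have n = 10, the standard error is the same under either assumption: sqrt(68²/10 + 22²/10) = sqrt(510.8) ≈ 22.60. Only the degrees of freedom differ, with 18 for the pooled procedure and about 10.86 for Welch. With these inputs the pooled 95% interval comes out to roughly (3.52, 98.48) and the Welch interval to roughly (1.18, 100.82). Both intervals exclude 0, and t ≈ 2.26 exceeds both critical values (about 2.101 and about 2.20). Under either assumption, H0: μ_Ohio = μ_Illinois would therefore be rejected at the 5% level. The sample standard deviations differ by a factor of about 3, so the Welch version is arguably the safer choice.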

