"The causes of corruption: a cross-national study" (Treisman, 2000, Journal of Public Economics, 76: 399-457)

Problem 1:

In "The causes of corruption: a cross-national study" (2000, Journal of Public Economics, 76: 399-457) Daniel Treisman examines possible determinants of perceived corruption. Download the Treisman data (treisman.csv) from the course website and read the data into R or Stata.

The key variables are as follows:

- TI98: Perceived corruption in 1998 as measured by the Transparency International 'poll of polls' index.

- commonlaw: Indicator for whether the country has a common law tradition as opposed to a civil law tradition.

- britcolony: Indicator for whether the country was formerly a British colony.

- noncolony: Indicator for whether the country was not formerly a colony.

- pctprot: Percentage of the country's population that is Protestant.

- elf: 1960 ethnolinguistic fragmentation (the probability that two randomly sampled individuals will not belong to the same ethnolinguistic group).

- FMMexports93: Proportion of exports due to fuels, metals, and minerals.

In this question we will examine the results for the britcolony variable within the minimal specification used in the paper. We will leave aside questions of causality and assume that we are interested in the model that includes these particular variables.

A) Basic OLS: Replicate the coefficients in the 1998 Model 1 from Table 3 of the paper using the lm() function (R) or reg (Stata). Provide a non-causal interpretation of the britcolony coefficient.

B) Diagnosing Heteroskedasticity

- Provide a scale-location plot for the regression you ran in Part A. Explain what you observe and what the plot indicates with regard to the assumption of constant variance.

- Provide a plot of the residuals vs. the fitted values for the regression you ran in Part A. Explain what you observe and what the plot indicates with regard to the assumption of constant variance.
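For intuition about what the Part B plots display, here is a minimal pure-Python sketch (an illustration, not the R or Stata workflow the assignment asks for) that computes the points of a scale-location plot for a one-regressor OLS fit: the fitted values against \(\sqrt{|r_i|}\), where \(r_i = e_i / (s\sqrt{1-h_{ii}})\) is the standardized residual, \(s\) the residual standard error, and \(h_{ii}\) the leverage. The function name scale_location_points and the data are hypothetical. In R, calling plot() on an lm object with which = 3 draws this panel, and which = 1 draws residuals vs. fitted values.

```python
import math


def scale_location_points(x, y):
    """Return (fitted, sqrt|standardized residual|) pairs for OLS of y on [1, x]."""
    n = len(x)
    xbar = sum(x) / n
    ybar = sum(y) / n
    sxx = sum((xi - xbar) ** 2 for xi in x)
    b = sum((xi - xbar) * (yi - ybar) for xi, yi in zip(x, y)) / sxx
    a = ybar - b * xbar
    fitted = [a + b * xi for xi in x]
    resid = [yi - fi for yi, fi in zip(y, fitted)]
    # residual standard error with n - 2 degrees of freedom (intercept + slope)
    s = math.sqrt(sum(e * e for e in resid) / (n - 2))
    # leverage of observation i in a one-regressor model
    lev = [1 / n + (xi - xbar) ** 2 / sxx for xi in x]
    std = [e / (s * math.sqrt(1 - h)) for e, h in zip(resid, lev)]
    return [(f, math.sqrt(abs(r))) for f, r in zip(fitted, std)]


# Hypothetical data whose spread grows with x (illustration only)
x = [1, 2, 3, 4, 5, 6, 7, 8, 9, 10]
y = [2.1, 3.9, 6.2, 7.8, 10.5, 11.0, 15.2, 14.1, 20.3, 17.5]
for fitted, root_abs_std in scale_location_points(x, y):
    print(f"{fitted:6.2f}  {root_abs_std:5.3f}")
```

A rising trend in the second column as the fitted values grow is the visual signature of variance that increases with the mean; a flat band is what constant variance looks like.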

C) Huber-White Heteroskedasticity-Robust Standard Errors: Replicate the standard errors in the table using the hccm() function with type = "hc1" (in R; take the square root of the diagonal of the covariance matrix it returns) or the robust option in Stata, and comment on the difference between the britcolony standard error in the table and the one from the lm() function. Provide one plausible explanation of why the Huber-White standard errors are smaller.

- Exploring Different Small Sample Corrections: As mentioned in lecture, there are multiple small-sample versions of heteroskedasticity-robust standard errors. The small-sample correction you used above is often termed HC1. HC1 applies a degrees-of-freedom correction that scales the Huber-White heteroskedasticity-robust variance-covariance matrix by the factor \(\frac{n}{n-(k+1)}\), where \(n\) is the sample size and \(k\) is the number of explanatory variables. However, simulation studies have shown that HC1 gives inaccurate standard errors for sample sizes \(n<250\) when heteroskedasticity is severe (although it still performs better than the Huber-White variance-covariance matrix without a small-sample correction). With milder heteroskedasticity, the necessary sample size is smaller. Two alternative heteroskedasticity-consistent standard errors are HC2 and HC3.

Calculate HC2 and HC3 heteroskedasticity-consistent standard errors in R using hccm() with type = "hc2" and type = "hc3", and in Stata using hc2 and hc3 instead of the robust option. Report the results in two tables and comment on your findings. How do these standard errors compare to the HC1 errors and to the uncorrected OLS standard errors?
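The sandwich estimators in Part C can be sketched in plain Python for a single-regressor model (so \(k = 1\) and the HC1 factor is \(n/(n-2)\)). HC0 is the uncorrected Huber-White matrix \((X'X)^{-1}\left(\sum_i e_i^2 x_i x_i'\right)(X'X)^{-1}\); HC1 multiplies it by \(n/(n-(k+1))\); HC2 and HC3 instead divide each \(e_i^2\) by \(1-h_{ii}\) and \((1-h_{ii})^2\), where \(h_{ii}\) is the leverage of observation \(i\). This is a hypothetical sketch on made-up data, not a replacement for hccm() or Stata's options; the names ols_hc and sandwich_se are illustrative.

```python
import math


def ols_hc(x, y):
    """OLS of y on [1, x] with classical and HC0-HC3 standard errors.

    Returns (intercept, slope), a dict of [se_intercept, se_slope] lists, and leverages.
    """
    n, p = len(x), 2  # p = k + 1 with k = 1 explanatory variable
    sx, sxx = sum(x), sum(xi * xi for xi in x)
    det = n * sxx - sx * sx
    inv = [[sxx / det, -sx / det], [-sx / det, n / det]]  # (X'X)^{-1}
    sy, sxy = sum(y), sum(xi * yi for xi, yi in zip(x, y))
    a = inv[0][0] * sy + inv[0][1] * sxy
    b = inv[1][0] * sy + inv[1][1] * sxy
    e = [yi - a - b * xi for xi, yi in zip(x, y)]
    # leverage h_i = x_i'(X'X)^{-1} x_i, the diagonal of the hat matrix
    h = [inv[0][0] + 2 * inv[0][1] * xi + inv[1][1] * xi * xi for xi in x]

    def sandwich_se(w):
        # square roots of the diagonal of (X'X)^{-1} [sum_i w_i e_i^2 x_i x_i'] (X'X)^{-1}
        return [math.sqrt(sum(wi * ei ** 2 * (inv[j][0] + inv[j][1] * xi) ** 2
                              for xi, ei, wi in zip(x, e, w)))
                for j in range(2)]

    s2 = sum(ei ** 2 for ei in e) / (n - p)
    se = {
        "OLS": [math.sqrt(s2 * inv[j][j]) for j in range(2)],
        "HC0": sandwich_se([1.0] * n),
        "HC1": sandwich_se([n / (n - p)] * n),
        "HC2": sandwich_se([1 / (1 - hi) for hi in h]),
        "HC3": sandwich_se([1 / (1 - hi) ** 2 for hi in h]),
    }
    return (a, b), se, h


# Hypothetical data whose spread grows with x (illustration only)
x = [1, 2, 3, 4, 5, 6, 7, 8, 9, 10]
y = [2.1, 3.9, 6.2, 7.8, 10.5, 11.0, 15.2, 14.1, 20.3, 17.5]
coef, se, lev = ols_hc(x, y)
for name, (se_a, se_b) in se.items():
    print(f"{name}: slope SE = {se_b:.4f}")
```

Because \(0 < h_{ii} < 1\), HC3 inflates every squared residual at least as much as HC2, which in turn inflates it at least as much as HC0, so for each coefficient the standard errors satisfy HC3 \(\ge\) HC2 \(\ge\) HC0, with high-leverage observations adjusted most.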

D) Weighted Least Squares Estimator: Replicate the coefficients in the 1998 Model 1 from Table 2 using weighted least squares. In R you can do this with the weights argument of the lm() function: lm(..., weights = 1/TI98variance). In Stata, you can do this with the aweight option: reg ti98 commonlaw ... fmmexports93 [aweight=1/ti98variance].
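Weighted least squares chooses coefficients to minimize \(\sum_i w_i (y_i - a - b x_i)^2\); with \(w_i = 1/\sigma_i^2\), observations measured with more noise count for less. The sketch below, in plain Python for a single regressor, uses hypothetical per-observation variances in place of TI98variance; the function wls_simple and the data are illustrative only.

```python
def wls_simple(x, y, w):
    """Weighted least squares for y = a + b*x, minimizing sum(w_i * (y_i - a - b*x_i)**2)."""
    sw = sum(w)
    xw = sum(wi * xi for wi, xi in zip(w, x)) / sw  # weighted mean of x
    yw = sum(wi * yi for wi, yi in zip(w, y)) / sw  # weighted mean of y
    sxy = sum(wi * (xi - xw) * (yi - yw) for wi, xi, yi in zip(w, x, y))
    sxx = sum(wi * (xi - xw) ** 2 for wi, xi in zip(w, x))
    b = sxy / sxx
    return yw - b * xw, b


x = [1, 2, 3, 4, 5, 6]
y = [1.2, 1.9, 3.2, 3.8, 5.5, 9.0]
variance = [0.1, 0.1, 0.2, 0.2, 0.5, 4.0]  # hypothetical per-observation variances
weights = [1 / v for v in variance]         # mirrors weights = 1/TI98variance

a_ols, b_ols = wls_simple(x, y, [1.0] * len(x))  # equal weights reduce to OLS
a_wls, b_wls = wls_simple(x, y, weights)
print(f"OLS slope {b_ols:.3f}, WLS slope {b_wls:.3f}")
```

The noisy last observation pulls the OLS slope up but receives little weight under WLS. Multiplying every weight by the same constant leaves the coefficients unchanged, so the normalization Stata applies to aweights does not change the point estimates relative to R's weights argument.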

E) Would you prefer to report the results from Part A, Part C, or Part D? Justify your decision.

F) Diagnosing Non-Normality

- Make a histogram of the residuals. Evaluate the plausibility of the normality assumption. Provide some intuition for your finding.

- Provide a normal Q-Q plot for the regression you ran in Part A. Explain what you observe and what the plot indicates with regard to the assumption of normality.
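A normal Q-Q plot pairs the sorted, standardized residuals with the corresponding quantiles of the standard normal distribution; points rising above the 45-degree line in the upper tail indicate a heavier right tail than the normal. This hypothetical sketch uses Python's statistics.NormalDist and the plotting positions \((i-0.5)/n\), one common choice (R's qqnorm() uses a slightly different rule via ppoints()). The residuals are made up and the function name qq_points is illustrative.

```python
from statistics import NormalDist, mean, stdev


def qq_points(residuals):
    """Pair standard-normal quantiles with sorted, standardized residuals."""
    n = len(residuals)
    m, s = mean(residuals), stdev(residuals)
    observed = sorted((r - m) / s for r in residuals)
    # plotting positions (i - 0.5) / n for i = 1..n
    theoretical = [NormalDist().inv_cdf((i - 0.5) / n) for i in range(1, n + 1)]
    return list(zip(theoretical, observed))


resid = [-1.8, -0.9, -0.4, -0.2, 0.0, 0.1, 0.3, 0.5, 1.1, 3.2]  # hypothetical, right-skewed
for t, o in qq_points(resid):
    print(f"{t:6.3f}  {o:6.3f}")
```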

G) Diagnosing Non-Linearity: Using techniques learned in this course, attempt to diagnose non-linear relationships for the independent variables (you do not have to check for interactions between the variables). Why is linearity trivially satisfied for the commonlaw, britcolony, and noncolony variables?

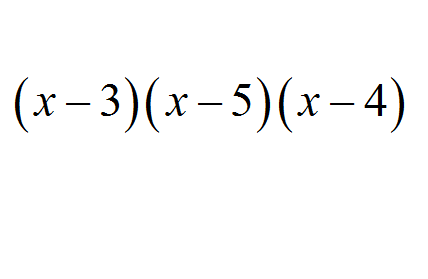

- If you find evidence of non-linearity, attempt to correct it by including polynomial terms in the regression. Are the results from your new model consistent with the previous results? If not, which results (positive or negative) differ?

Problem 2:

Suppose you lose some of the outcome data (TI98). You do not know the missingness mechanism. None of the covariate data is lost. The dataset with missingness that you should use for this problem is treisman_missing.csv.

A) Provide a (made-up) story about the missingness mechanism that would justify each of the following assumptions about the nature of missingness:

- MCAR (missing completely at random)

- MAR (missing at random)

- NMAR (not missing at random)
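A small simulation can make the three mechanisms concrete. In this hypothetical setup (the covariate x, outcome y, and all missingness probabilities are invented and have nothing to do with the Treisman data), units are dropped either with a constant probability (MCAR), with a probability that depends only on the observed covariate (MAR), or with a probability that depends on the outcome itself (NMAR). The complete-case mean of y is then compared with its true value of 0.

```python
import random
from statistics import mean

random.seed(1)
N = 100_000
x = [random.gauss(0, 1) for _ in range(N)]  # fully observed covariate
y = [xi + random.gauss(0, 1) for xi in x]   # outcome; its true mean is 0


def observed_mean(prob_missing):
    """Complete-case mean of y when unit i is dropped with probability prob_missing(i)."""
    kept = [y[i] for i in range(N) if random.random() >= prob_missing(i)]
    return mean(kept)


mcar = observed_mean(lambda i: 0.3)                       # ignores both x and y
mar = observed_mean(lambda i: 0.6 if x[i] > 0 else 0.1)   # depends on observed x only
nmar = observed_mean(lambda i: 0.6 if y[i] > 0 else 0.1)  # depends on y itself
print(f"MCAR {mcar:.3f}  MAR {mar:.3f}  NMAR {nmar:.3f}")
```

Only the MCAR complete-case mean stays near 0. Under MAR the bias runs through x, which is observed and so can in principle be used to correct it; under NMAR the missingness depends on y itself, which the observed data alone cannot undo without further assumptions.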

B) Create a table of the following form that contains means of the covariates across two groups: those that are missing the TI98 outcome and those that are not. Then provide the difference in means. In no more than a couple of sentences, comment on what you observe.
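The table in Part B amounts to splitting the rows on a missingness indicator and comparing covariate means. A minimal sketch in plain Python, assuming a hypothetical row layout of (TI98, britcolony, pctprot) with None marking a missing outcome; the values are made up and only two covariates are shown.

```python
from statistics import mean

# Hypothetical rows: (TI98 or None if missing, britcolony, pctprot)
rows = [
    (7.1, 1, 40.0), (None, 0, 2.0), (3.2, 0, 1.5), (None, 1, 10.0),
    (8.9, 0, 60.0), (2.6, 1, 5.0), (None, 0, 0.5), (5.0, 0, 20.0),
]
covariates = {"britcolony": 1, "pctprot": 2}  # column positions in each row

missing = [r for r in rows if r[0] is None]
observed = [r for r in rows if r[0] is not None]

table = {}
for name, col in covariates.items():
    m_miss = mean(r[col] for r in missing)
    m_obs = mean(r[col] for r in observed)
    table[name] = (m_miss, m_obs, m_miss - m_obs)
    print(f"{name:10s} missing={m_miss:7.3f} observed={m_obs:7.3f} diff={m_miss - m_obs:7.3f}")
```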

C) Given your results in Part B, which assumption from Part A do you believe most in this context, and why?

For Parts D-F, we are going to estimate both the mean of TI98 and the coefficient on britcolony from the basic OLS regression in Part A of Problem 1, using various missing-data techniques to deal with missingness in the outcome. You may find it helpful to create a missingness indicator variable.

D) Estimate the mean of TI98 and the regression coefficient on britcolony using a complete-case analysis.

E) Estimate the mean of TI98 and the regression coefficient on britcolony using mean imputation of the outcome.

F) Estimate the mean of TI98 and the regression coefficient on britcolony using regression mean imputation (given all covariates as in Part A of Problem 1). That is, first run a regression on the complete-case data as in Part D. Then use the estimated coefficients to predict the missing values of TI98. Finally, compute the mean of TI98 and run a regression on the imputed dataset, reporting the coefficient on britcolony.
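The three estimators in Parts D-F can be compared on a toy dataset. The sketch below assumes a single, fully observed binary covariate x standing in for britcolony (so the OLS slope equals the difference in group means) and a hypothetical outcome y with three missing values; it is not the full Part A specification, and the helper ols is illustrative.

```python
from statistics import mean


def ols(x, y):
    """Intercept and slope of an OLS fit of y on [1, x]."""
    xbar, ybar = mean(x), mean(y)
    b = sum((xi - xbar) * (yi - ybar) for xi, yi in zip(x, y)) / sum((xi - xbar) ** 2 for xi in x)
    return ybar - b * xbar, b


# Hypothetical data: x is a fully observed 0/1 covariate, y has missing outcomes (None)
x = [1, 0, 0, 1, 0, 1, 0, 0, 1, 0]
y = [7.5, 3.0, None, 6.0, 2.5, None, 4.0, None, 8.5, 3.5]
cc_x = [xi for xi, yi in zip(x, y) if yi is not None]
cc_y = [yi for yi in y if yi is not None]

# D) complete-case analysis
cc_mean = mean(cc_y)
cc_slope = ols(cc_x, cc_y)[1]

# E) mean imputation: fill every missing y with the observed mean
y_mean_imp = [yi if yi is not None else cc_mean for yi in y]
mi_mean, mi_slope = mean(y_mean_imp), ols(x, y_mean_imp)[1]

# F) regression imputation: fill missing y with predictions from the complete-case fit
a, b = ols(cc_x, cc_y)
y_reg_imp = [yi if yi is not None else a + b * xi for xi, yi in zip(x, y)]
ri_mean, ri_slope = mean(y_reg_imp), ols(x, y_reg_imp)[1]

for label, m, s in [("complete case", cc_mean, cc_slope),
                    ("mean imputation", mi_mean, mi_slope),
                    ("regression imputation", ri_mean, ri_slope)]:
    print(f"{label:22s} mean={m:.3f} slope={s:.3f}")
```

Two mechanical facts show up: mean imputation reproduces the complete-case mean exactly while, in this example, pulling the slope toward zero, and regression imputation reproduces the complete-case slope exactly, because every imputed point lies on the complete-case regression line.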

G) What is the problem with all of the procedures above in terms of the uncertainty we obtain on the estimated britcolony coefficient?
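To see the uncertainty problem numerically, the sketch below uses the same kind of hypothetical toy data: it fits the complete-case regression, fills the missing outcomes with regression predictions, and then naively refits on the filled-in data, computing classical OLS standard errors both times. The helper slope_and_se and the data are illustrative only.

```python
import math
from statistics import mean


def slope_and_se(x, y):
    """OLS slope of y on [1, x] and its classical (homoskedastic) standard error."""
    n = len(x)
    xbar, ybar = mean(x), mean(y)
    sxx = sum((xi - xbar) ** 2 for xi in x)
    b = sum((xi - xbar) * (yi - ybar) for xi, yi in zip(x, y)) / sxx
    a = ybar - b * xbar
    rss = sum((yi - a - b * xi) ** 2 for xi, yi in zip(x, y))
    return b, math.sqrt(rss / (n - 2) / sxx)


x = [1, 0, 0, 1, 0, 1, 0, 0, 1, 0]
y = [7.5, 3.0, None, 6.0, 2.5, None, 4.0, None, 8.5, 3.5]
cc_x = [xi for xi, yi in zip(x, y) if yi is not None]
cc_y = [yi for yi in y if yi is not None]

b_cc, se_cc = slope_and_se(cc_x, cc_y)
a_cc = mean(cc_y) - b_cc * mean(cc_x)
y_imp = [yi if yi is not None else a_cc + b_cc * xi for xi, yi in zip(x, y)]
b_imp, se_imp = slope_and_se(x, y_imp)  # treats imputed values as if they were observed
print(f"complete case: {b_cc:.3f} (SE {se_cc:.3f}); imputed: {b_imp:.3f} (SE {se_imp:.3f})")
```

The slope is identical, yet the naive standard error shrinks, because the imputed points contribute zero residuals and extra degrees of freedom as though they were real observations. Multiple imputation is the standard way to carry this imputation uncertainty into the final standard errors.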

Deliverable: Word Document
