
Question: Least squares with smoothness regularization. Consider the weighted sum least squares objective

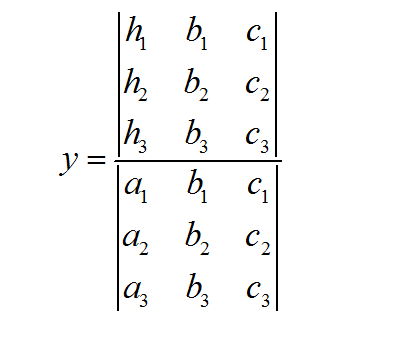

\[\|A x-b\|^{2}+\lambda\|D x\|^{2},\]

where the \(n\)-vector \(x\) is the variable, \(A\) is an \(m \times n\) matrix, \(D\) is the \((n-1) \times n\) difference matrix with \(i\)th row \(\left(e_{i+1}-e_{i}\right)^{T}\), and \(\lambda>0\). Although it does not matter in this problem, this is the objective we would minimize if we wanted an \(x\) that satisfies \(A x \approx b\) and has smoothly varying entries. We can express this objective as a standard least squares objective with a stacked matrix of size \((m+n-1) \times n\). Show that the stacked matrix has linearly independent columns if and only if \(A \mathbf{1} \neq 0\), i.e., the sum of the columns of \(A\) is not zero.
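To make the stacking concrete, here is a minimal NumPy sketch. It assumes the usual choice of stacked matrix \(S = \begin{bmatrix} A \\ \sqrt{\lambda}\,D \end{bmatrix}\) with right-hand side \((b, 0)\); the helper names `difference_matrix` and `stacked_problem` and the random data are hypothetical, chosen only for illustration. It checks numerically that \(\|Sx - (b,0)\|^2\) equals the weighted objective for one random \(x\).

```python
import numpy as np

def difference_matrix(n):
    # Row i is e_{i+1} - e_i: consecutive differences of the rows of I_n.
    return np.diff(np.eye(n), axis=0)

def stacked_problem(A, b, lam):
    # Hypothetical helper: stack A over sqrt(lam) * D, pad b with n - 1 zeros.
    n = A.shape[1]
    S = np.vstack([A, np.sqrt(lam) * difference_matrix(n)])
    c = np.concatenate([b, np.zeros(n - 1)])
    return S, c

rng = np.random.default_rng(0)
m, n, lam = 5, 4, 0.7
A = rng.standard_normal((m, n))
b = rng.standard_normal(m)
x = rng.standard_normal(n)

S, c = stacked_problem(A, b, lam)
D = difference_matrix(n)
stacked = np.sum((S @ x - c) ** 2)
weighted = np.sum((A @ x - b) ** 2) + lam * np.sum((D @ x) ** 2)
print(S.shape)                        # (8, 4), i.e. (m + n - 1, n)
print(np.isclose(stacked, weighted))  # True
```

The factor \(\sqrt{\lambda}\) (rather than \(\lambda\)) is what makes the squared norm of the lower block equal \(\lambda\|Dx\|^2\).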

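Let \(S = \begin{bmatrix} A \\ \sqrt{\lambda}\, D \end{bmatrix}\) denote the stacked matrix, so that the objective equals \(\left\|S x-\begin{bmatrix} b \\ 0 \end{bmatrix}\right\|^{2}\). Each row of \(D\) satisfies \(\left(e_{i+1}-e_{i}\right)^{T}\mathbf{1} = 1-1 = 0\), so \(D\mathbf{1}=0\). Hence if \(A\mathbf{1}=0\), then \(S\mathbf{1}=0\) with \(\mathbf{1}\neq 0\), so the stacked matrix does not have linearly independent columns. So the condition is necessary.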
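A quick numerical illustration of the necessity argument, as a sketch under assumed example data: subtracting each row's mean from a random \(A\) forces every row to sum to zero, so \(A\mathbf{1}=0\). The stacked matrix \(S = \begin{bmatrix} A \\ \sqrt{\lambda}\,D \end{bmatrix}\) then maps \(\mathbf{1}\) to zero and loses exactly one rank.

```python
import numpy as np

rng = np.random.default_rng(1)
m, n, lam = 5, 4, 2.0
A = rng.standard_normal((m, n))
# Subtract each row's mean so every row sums to zero, i.e. A @ 1 = 0.
A = A - A.mean(axis=1, keepdims=True)
D = np.diff(np.eye(n), axis=0)           # rows e_{i+1} - e_i
S = np.vstack([A, np.sqrt(lam) * D])
ones = np.ones(n)

print(np.allclose(A @ ones, 0))          # True
print(np.allclose(S @ ones, 0))          # True: 1 is a nonzero null vector of S
print(np.linalg.matrix_rank(S))          # 3, i.e. n - 1
```

The rank is exactly \(n-1\) here because the null space of \(S\) lies inside the null space of \(D\), which is spanned by \(\mathbf{1}\).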

To show it is sufficient, assume \(A\mathbf{1} \neq 0\) and suppose that \(S x=0\). This is the same as \(A x=0\) and \(\sqrt{\lambda}\, D x=0\). Since \(\lambda>0\), the second condition reduces to \(D x=0\), i.e., \(x_{i+1}-x_{i}=0\) for \(i=1, \ldots, n-1\). This holds only if \(x_{1}=x_{2}=\cdots=x_{n}\), i.e., \(x\) is a constant vector \(x=\alpha \mathbf{1}\) for some scalar \(\alpha\). Substituting into the first equation gives \(\alpha A\mathbf{1}=0\), and since \(A\mathbf{1} \neq 0\), this means \(\alpha=0\). Therefore \(S x=0\) only if \(x=0\), so \(S\) has linearly independent columns.
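The sufficiency result is notable because \(A\) itself may be far from having independent columns. The sketch below uses an assumed toy example: \(A\) is a single row that measures only \(x_1\), so \(A\) has rank 1, yet \(A\mathbf{1} = 1 \neq 0\) and the stacked matrix has full column rank. It then compares the stacked least squares solution from `np.linalg.lstsq` with the normal equations \((A^TA + \lambda D^TD)x = A^Tb\), whose matrix \(S^TS\) is invertible exactly when \(S\) has independent columns.

```python
import numpy as np

n, lam = 4, 0.5
# Toy example: one measurement of x_1 only. A has rank 1, but A @ 1 = [1] != 0.
A = np.array([[1.0, 0.0, 0.0, 0.0]])
b = np.array([3.0])
D = np.diff(np.eye(n), axis=0)           # rows e_{i+1} - e_i
S = np.vstack([A, np.sqrt(lam) * D])
c = np.concatenate([b, np.zeros(n - 1)])

print(np.linalg.matrix_rank(A))          # 1
print(np.linalg.matrix_rank(S))          # 4 = n, so S has independent columns

# Unique minimizer of the stacked least squares problem.
x_ls, *_ = np.linalg.lstsq(S, c, rcond=None)
# Same minimizer from the normal equations (S^T S is invertible here).
x_ne = np.linalg.solve(A.T @ A + lam * D.T @ D, A.T @ b)
print(np.allclose(x_ls, x_ne))           # True
print(np.round(x_ls, 6))                 # [3. 3. 3. 3.]
```

The minimizer is the constant vector \(3\cdot\mathbf{1}\): it fits \(x_1 = 3\) exactly and has zero roughness, so the objective is zero.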
